Previously available in the May CTP and released today, the 9th of August: an update of the AppFabric Service Bus containing the wonderful Queues and Topics.

Here is how to implement the great pub/sub mechanism using Topics. Topics let you build a one-to-many messaging solution where the filtering rules live NOT in your application (or database or wherever) but, just like ACS, in configuration on the Service Bus. This lets you move messaging logic out of your app and onto the bus instead of implementing complex rules in your application logic.
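To make the one-to-many idea concrete, here is a minimal in-memory model of a topic with filtered subscriptions. It is not the Service Bus API. `InMemoryTopic`, `Subscribe`, `Publish` and `Receive` are hypothetical names, and the filters are plain C# predicates over message properties that stand in for the rules you would configure on the bus. It only illustrates the semantics: the publisher knows nothing about its subscribers, and every subscription whose filter matches gets the message.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical in-memory model of a topic with filtered subscriptions.
// This is NOT the Service Bus API; it only mimics the one-to-many semantics.
public sealed class InMemoryTopic
{
    private sealed class Subscription
    {
        public Func<IDictionary<string, object>, bool> Filter;
        public readonly Queue<IDictionary<string, object>> Messages = new Queue<IDictionary<string, object>>();
    }

    private readonly Dictionary<string, Subscription> _subscriptions = new Dictionary<string, Subscription>();

    // The filter plays the role of a rule configured on the bus, outside the publishing application.
    public void Subscribe(string name, Func<IDictionary<string, object>, bool> filter)
    {
        _subscriptions[name] = new Subscription { Filter = filter };
    }

    // Delivers the message to every subscription whose filter matches.
    // Returns the number of subscriptions that received it.
    public int Publish(IDictionary<string, object> messageProperties)
    {
        int delivered = 0;
        foreach (Subscription subscription in _subscriptions.Values)
        {
            if (subscription.Filter(messageProperties))
            {
                subscription.Messages.Enqueue(messageProperties);
                delivered++;
            }
        }
        return delivered;
    }

    // Removes and returns the next message for a subscription, or null if it has none.
    public IDictionary<string, object> Receive(string subscriptionName)
    {
        Queue<IDictionary<string, object>> queue = _subscriptions[subscriptionName].Messages;
        return queue.Count > 0 ? queue.Dequeue() : null;
    }
}
```

In the scenario described next, the operations admin could subscribe with `p => (string)p["EventType"] == "HourlyAverage"` and the manager with `p => (string)p["EventType"] == "Scaling"`. The worker role then publishes both kinds of message without knowing who is listening. The `EventType` property is a made-up example.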

Imagine your application running on Azure (a worker role) being monitored with Performance Counters. These are collected locally and flushed to storage at a configurable interval. Now imagine the system administrators responsible for monitoring your cloud assets. They are at home but still need to be notified of events. Say two system administrators, both carrying a WP7 phone, are the subscribers to your messaging solution. The first admin is responsible for scaling your Windows Azure app (a worker role doing lots of calculations) up and down. The second admin (the manager) only needs to be notified of the actual scale-up and scale-down events. The first admin receives messages showing hourly averages of CPU utilization and free memory, and based on these he can decide to scale up or down. The next blog post will show how to set up the Service Bus and create this pub/sub mechanism.
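The hourly averages the first admin receives could be computed along these lines before they are published. This sketch assumes each sample is a simple (timestamp, counter name, value) record. `CounterSample` and `PerformanceAggregation` are hypothetical names, not types from the Azure diagnostics API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical sample type: one performance counter reading.
public sealed class CounterSample
{
    public DateTime TimestampUtc { get; set; }
    public string CounterName { get; set; }
    public double Value { get; set; }
}

public static class PerformanceAggregation
{
    // Cuts a UTC timestamp back to the start of its clock hour.
    private static DateTime TruncateToHour(DateTime utc) =>
        new DateTime(utc.Ticks - utc.Ticks % TimeSpan.TicksPerHour, DateTimeKind.Utc);

    // Groups samples per counter and per clock hour, then averages each group.
    public static Dictionary<(string Counter, DateTime Hour), double> HourlyAverages(IEnumerable<CounterSample> samples) =>
        samples
            .GroupBy(s => (Counter: s.CounterName, Hour: TruncateToHour(s.TimestampUtc)))
            .ToDictionary(g => g.Key, g => g.Average(s => s.Value));
}
```

Grouping by clock hour, rather than by a sliding 60-minute window, keeps each message self-contained: one average per counter per hour.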

I'll finish the next one in a few days.

Thursday, September 8, 2011

Tuesday, June 21, 2011

Playing with IoC, Enterprise Library 5.0 and Azure

After some discussion with a fellow tweeter (thanks to Amit Bahree, @bahree) I decided to write a bit about IoC and DI combined with the full force of Azure. Recently I wrote about the principle of a "Generic Worker": a worker role on Azure that is able to dynamically load and unload assemblies and fully utilize every dollar you pay for Azure. The solution was pretty straightforward.

The next step for the Generic Worker is to use IoC and DI to fully decouple the worker role plumbing from the actual functionality. IoC also makes it easy to configure your worker role(s) and, for example, dynamically add or remove aspects (AOP) in your applications. The power of AOP lies in weaving these behaviors together: you apply different aspects to change the behavior and functionality of classes without resorting to techniques like inheritance.

My first step is to extend the basic Unity behaviour with my own Resolve method. This method resolves types that are not loaded in my AppDomain but reside in my assembly blob storage. Follow these steps to build completely decoupled software using Blob Storage and Unity.

1. Create a class library that contains the interfaces for the classes you want to be loosely coupled.

```csharp
public interface ICalculation
{
    int Addition(int a, int b);
}
```

2. Create a class library with a concrete implementation of this interface. This class implements one or more of the interfaces you defined in the class library from step 1.

```csharp
public class DefaultCalculation : ICalculation
{
    public int Addition(int a, int b)
    {
        return a + b;
    }
}
```

3. Build the class library containing the implementation, then upload the resulting assembly to your Azure Blob Storage. In my case the assembly sits in the assemblies container of my assemblyline storage account.

4. Extend the Unity container with your own method that resolves in a different way. Instead of looking for implementations in the current AppDomain, it takes assemblies from Blob Storage and loads them. This code runs in my worker role, which is supposed to be as generic as possible.

```csharp
using (IUnityContainer container = new UnityContainer())
{
    container.ResolveFromBlobStorage<ICalculation>();
}
```

I will update this code with a fancy LINQ query later, but there is no time right now.

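```csharp
using System;
using System.Reflection;
using Microsoft.Practices.Unity;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

// Extension methods must live in a static class.
public static class UnityBlobStorageExtensions
{
    // Registers every concrete implementation of T found in the assemblies stored in blob storage.
    public static void ResolveFromBlobStorage<T>(this IUnityContainer container) where T : class
    {
        CloudStorageAccount csa = new CloudStorageAccount(
            new StorageCredentialsAccountAndKey("assemblyline", "here goes your key"),
            true); // use HTTPS

        // Take the assemblies from the "assemblies" container in Blob Storage.
        CloudBlobContainer cbc = csa.CreateCloudBlobClient().GetContainerReference("assemblies");

        foreach (IListBlobItem assembly in cbc.ListBlobs())
        {
            byte[] byteStream = cbc.GetBlobReference(assembly.Uri.AbsoluteUri).DownloadByteArray();

            // Load the assembly from the blob into the current AppDomain and inspect only that assembly.
            Assembly loadedAssembly = AppDomain.CurrentDomain.Load(byteStream);

            foreach (Type type in loadedAssembly.GetTypes())
            {
                // Skip types that don't implement T, and interfaces or abstract classes that can't be instantiated.
                if (!typeof(T).IsAssignableFrom(type) || type.IsInterface || type.IsAbstract)
                    continue;

                // One shared instance per container.
                container.RegisterType(typeof(T), type, new ContainerControlledLifetimeManager());
            }
        }
    }
}
```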

With this code in place, the Unity container is extended with the ResolveFromBlobStorage method.

5. Finally, use it:

```csharp
using (IUnityContainer container = new UnityContainer())
{
    // Register the implementation found in blob storage, then resolve it through the interface only.
    container.ResolveFromBlobStorage<ICalculation>();

    ICalculation math = container.Resolve<ICalculation>();

    Console.WriteLine("adding 2 and 3 makes : {0}", math.Addition(2, 3));
}
```

The ResolveFromBlobStorage method lets me keep concrete implementations outside of my solution, stuffed away in Blob Storage. I only need the interface, that's it!
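To try the type-scanning part without a storage account or Unity, the sketch below applies the same `IsAssignableFrom` check to an already loaded `Assembly` and registers the first match in a tiny dictionary-based registry. `SimpleRegistry` is a hypothetical stand-in for the Unity container, not part of Unity. In the worker role the assembly would come from `AppDomain.CurrentDomain.Load(byteStream)` instead of `typeof(...).Assembly`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public interface ICalculation
{
    int Addition(int a, int b);
}

public class DefaultCalculation : ICalculation
{
    public int Addition(int a, int b) => a + b;
}

// Hypothetical minimal registry standing in for IUnityContainer; not part of Unity.
public sealed class SimpleRegistry
{
    private readonly Dictionary<Type, Type> _mappings = new Dictionary<Type, Type>();

    // Registers the first concrete, non-abstract type in 'assembly' that implements T.
    public bool RegisterFromAssembly<T>(Assembly assembly) where T : class
    {
        Type implementation = assembly.GetTypes()
            .FirstOrDefault(t => typeof(T).IsAssignableFrom(t) && !t.IsInterface && !t.IsAbstract);
        if (implementation == null)
            return false;

        _mappings[typeof(T)] = implementation;
        return true;
    }

    // Creates a new instance of the registered type (requires a public parameterless constructor).
    public T Resolve<T>() where T : class => (T)Activator.CreateInstance(_mappings[typeof(T)]);
}
```

Unlike Unity's `ContainerControlledLifetimeManager`, which hands out one shared instance, this registry creates a new instance on every `Resolve` call. That keeps the sketch short.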

Happy programming!

Labels:

azure,

enterprise library 5.0,

injection,

IoC,

unity

Wednesday, June 15, 2011

Manage Windows Azure AppFabric Cache and some other considerations

Windows Azure AppFabric Caching is a very powerful and easy-to-use mechanism that can speed up your applications and improve performance and user experience.

It's Windows Server Cache but different

Azure Caching contains a subset of the features of Windows Server AppFabric. Developing for either one uses the Microsoft.ApplicationServer.Caching namespace. You can use the same API, but with some differences. That's a shame, because without them choosing between the two would be a matter of deployment instead of an architectural decision. For example, regions, notifications and tags are not available (yet). The maximum size of a serialized object in Azure Cache is 8 MB. Furthermore, since it's the cloud, you don't manage or influence the cache directly. So if you want to target both Azure and on-premises, you need to account for these differences and design for them. Always design for items missing from the cache. You are not in charge (the Azure Overlord is), and items might disappear for one reason or another, especially when you exceed your cache limit.
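Designing for missing items usually means the cache-aside pattern: every read tolerates a miss and falls back to the source. Here is a minimal sketch. `ICacheStore`, `DictionaryCacheStore` and `CacheAside.GetOrLoad` are hypothetical names. With AppFabric, the store would wrap a `DataCache`, whose `Get` returns null on a miss.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction over a cache; a DataCache wrapper could implement it.
public interface ICacheStore
{
    object Get(string key); // returns null when the item is missing
    void Put(string key, object value, TimeSpan timeToLive);
}

// In-memory stand-in used for the example; it ignores the time-to-live.
public sealed class DictionaryCacheStore : ICacheStore
{
    private readonly Dictionary<string, object> _items = new Dictionary<string, object>();
    public object Get(string key) => _items.TryGetValue(key, out object value) ? value : null;
    public void Put(string key, object value, TimeSpan timeToLive) => _items[key] = value;
    public void Evict(string key) => _items.Remove(key); // simulates the platform dropping an item
}

public static class CacheAside
{
    // Never assume the item is there: on a miss, load from the source and repopulate the cache.
    public static T GetOrLoad<T>(ICacheStore cache, string key, Func<T> loadFromSource, TimeSpan timeToLive)
        where T : class
    {
        if (cache.Get(key) is T cached)
            return cached;

        T loaded = loadFromSource();
        cache.Put(key, loaded, timeToLive);
        return loaded;
    }
}
```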

Expiration is not the default behaviour of the Windows Azure cache. Instead, the least recently used items are evicted when the cache reaches its limit. Remember that you can add items with an expiration time to override this default behaviour.

```csharp
cache.Add(key, data, TimeSpan.FromHours(1));
```

This statement causes my "data" to expire after one hour.
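To reason about these two behaviours together, here is a toy model. It is a capacity-bound cache that evicts the least recently used item when full and, optionally, honours a per-item timeout like the one passed to `cache.Add` above. `LruCacheModel` is hypothetical. It mimics the behaviour described in this post, not AppFabric's internal eviction algorithm. The clock is injected so expiry can be tested.

```csharp
using System;
using System.Collections.Generic;

// Toy model: LRU eviction when full, plus an optional expiry time per item.
public sealed class LruCacheModel
{
    private sealed class Entry
    {
        public string Key;
        public object Value;
        public DateTime? ExpiresAtUtc; // null = no timeout; the item lives until it is evicted
    }

    private readonly int _capacity;
    private readonly Func<DateTime> _utcNow;
    private readonly Dictionary<string, LinkedListNode<Entry>> _index = new Dictionary<string, LinkedListNode<Entry>>();
    private readonly LinkedList<Entry> _recency = new LinkedList<Entry>(); // front = most recently used

    public LruCacheModel(int capacity, Func<DateTime> utcNow)
    {
        if (capacity < 1) throw new ArgumentOutOfRangeException(nameof(capacity));
        _capacity = capacity;
        _utcNow = utcNow;
    }

    public void Put(string key, object value, TimeSpan? timeout = null)
    {
        if (_index.TryGetValue(key, out LinkedListNode<Entry> existing))
        {
            _recency.Remove(existing);
            _index.Remove(key);
        }
        else if (_index.Count >= _capacity)
        {
            // Cache is full: evict the least recently used entry (the tail of the list).
            LinkedListNode<Entry> lru = _recency.Last;
            _recency.RemoveLast();
            _index.Remove(lru.Value.Key);
        }

        DateTime? expiresAt = timeout.HasValue ? _utcNow() + timeout.Value : (DateTime?)null;
        _index[key] = _recency.AddFirst(new Entry { Key = key, Value = value, ExpiresAtUtc = expiresAt });
    }

    // Returns null for missing or expired items, so callers must handle a miss.
    public object Get(string key)
    {
        if (!_index.TryGetValue(key, out LinkedListNode<Entry> node))
            return null;

        if (node.Value.ExpiresAtUtc.HasValue && node.Value.ExpiresAtUtc.Value <= _utcNow())
        {
            _recency.Remove(node);
            _index.Remove(key);
            return null;
        }

        // A hit makes the entry the most recently used one.
        _recency.Remove(node);
        _recency.AddFirst(node);
        return node.Value.Value;
    }
}
```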

Keep in mind that with Windows Azure Caching you have caching on tap, which saves you from plumbing your own cache. It keeps you focused on the application itself: you just 'enable' caching in Azure and start using it. You get fast access, massive scalability (especially compared to SQL Azure), one layer that provides cache access, and a very easy, understandable pricing structure.

A good alternative even for onpremise applications!

Labels:

azure,

cache,

microsoft,

Windows Azure AppFabric Caching

Monday, May 30, 2011

Windows Azure AppFabric Cache next steps

A very straightforward way of using Windows Azure AppFabric Caching is to store records from a SQL Azure table (or any other source, of course).

Get access to your data cache (assuming your configuration settings are fine; see the previous post).

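```csharp
// Requires: using System; using System.Collections.Generic; using System.Linq;
//           using Microsoft.ApplicationServer.Caching;
DataCacheFactory cacheFactory = new DataCacheFactory(); // expensive to create: create once and reuse
DataCache myDataCache = cacheFactory.GetDefaultCache();

// Use the same key for reading and writing.
const string cacheKey = "MyLookUpItems";
List<KeyValuePair<int, string>> lookUpItems = myDataCache.Get(cacheKey) as List<KeyValuePair<int, string>>;

if (lookUpItems != null) // there is something in the cache
{
    // Marker entry so you can see these items came from the cache.
    lookUpItems.Add(new KeyValuePair<int, string>(-1, "got these lookups from myDataCache, don't pick me"));
}
else // get the items from the data source and save them in the cache
{
    using (LookUpEntities myContext = new LookUpEntities()) // Entity Framework context
    {
        lookUpItems = (from lookupitem in myContext.LookUpItems
                       select new { lookupitem.ID, lookupitem.Value })
                      .AsEnumerable() // LINQ to Entities can't call the KeyValuePair constructor
                      .Select(i => new KeyValuePair<int, string>(i.ID, i.Value))
                      .ToList();
    }

    /* Assuming the static lookup table changes only about once a day, set the expiration to 1 day.
       One day after the item is cached it expires, Get returns null and the items are reloaded.
       Put overwrites instead of throwing if another instance stored the key first. */
    myDataCache.Put(cacheKey, lookUpItems, TimeSpan.FromDays(1));
}
```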

Easy to use and very effective. The more complex and time-consuming the query against your data source (wherever and whatever it is), the more your performance will benefit from this approach. But still consider the price you have to pay! The natural attitude when developing for Azure is always: consider the costs of your approach and try to minimize bandwidth and storage transactions.

Use local caching for speed

You can use local client caching to truly speed up lookups. Remember that with a local cache, changing an item you retrieved actually changes the cached item itself, for example the items shown in your comboboxes.
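The pitfall in that last sentence is object aliasing. A local cache hands you a reference to the object it holds, so mutating the result also mutates the cached copy for every later reader. The sketch below simulates this with a plain dictionary standing in for the local cache (an assumption: it is not the AppFabric client). It also shows the usual defence, which is to copy before you modify. `LocalCacheAliasing` is a hypothetical name.

```csharp
using System.Collections.Generic;

public static class LocalCacheAliasing
{
    // Stand-in for an in-process (local) cache: it stores and returns object references.
    private static readonly Dictionary<string, object> LocalCache = new Dictionary<string, object>();

    public static void Store(string key, List<string> items) => LocalCache[key] = items;

    // Returns the cached instance itself: changes made by the caller are visible to everyone.
    public static List<string> GetShared(string key) => (List<string>)LocalCache[key];

    // Returns a defensive copy: the caller can add a "don't pick me" entry without touching the cache.
    public static List<string> GetCopy(string key) => new List<string>((List<string>)LocalCache[key]);
}
```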

Friday, May 27, 2011

Windows Azure AppFabric Cache Introduction

Windows Azure now offers a caching service. It's a cloud-scale caching mechanism that helps you speed up your cloud apps. It does exactly what a cache is supposed to do: offer high-speed access to your application data (with high availability and scalability; it's the cloud, after all).

How to setup caching?

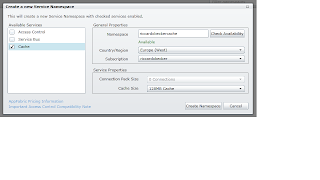

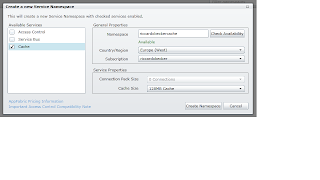

Click the Service Bus, Access Control & Caching tab on the left side of the Windows Azure portal. Click New Namespace in the toolbar, create the namespace you like and choose the appropriate cache size. See the screenshots.

After creation, the platform arranges everything: security, scalability, availability, access tokens, etc.

Once you have created the caching namespace, you can start using it. First, add references to the caching assemblies. You can find them in the Program Files\Windows Azure AppFabric SDK\V1.0\Assemblies\NET4.0\Cache folder. Select the Microsoft.ApplicationServer.Caching.Client and Microsoft.ApplicationServer.Caching.Core assemblies and voila. ASP.NET projects also need the Microsoft.Web.DistributedCache assembly.

The easiest way to configure access to your cache is to copy the configuration into your app.config (or web.config). Click the cache namespace in the Azure portal, then click View Client Configuration in the toolbar. Copy the settings and paste them into your config file in Visual Studio.

Create a console app, copy in the code below and voila: your scalable, highly available, cloudy cache is up and running!

```csharp
using System;
using Microsoft.ApplicationServer.Caching;

namespace ConsoleApplication1
{
    class Program
    {
        static void Main(string[] args)
        {
            // Cache client configured by the settings in the application configuration file.
            DataCacheFactory cacheFactory = new DataCacheFactory();
            DataCache defaultCache = cacheFactory.GetDefaultCache();

            // Store a test object in the default cache and read it back with the SAME key.
            // Put overwrites an existing item; Add would throw if the key already exists.
            defaultCache.Put("myuniquekey", "testobject");
            string strObject = (string)defaultCache.Get("myuniquekey");

            Console.WriteLine(strObject);
        }
    }
}
```

What can you store in the cache? Practically anything, as long as it's serializable.
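A quick way to check that an object is serializable, and to estimate its size against the 8 MB per-object limit of Azure Cache, is to serialize it yourself. This sketch uses `DataContractSerializer` as an approximation. As far as I know, the AppFabric cache client uses the `NetDataContractSerializer` by default, so treat the number as an estimate. `CacheSizeCheck` is a hypothetical helper, and "8 MB" is assumed to mean 8 × 1024 × 1024 bytes.

```csharp
using System.IO;
using System.Runtime.Serialization;

public static class CacheSizeCheck
{
    // Assumed interpretation of the 8 MB per-object limit.
    public const long MaxObjectBytes = 8L * 1024 * 1024;

    // Serializes the object with DataContractSerializer and returns the byte count.
    // Throws if the type cannot be serialized.
    public static long SerializedSizeInBytes(object value)
    {
        var serializer = new DataContractSerializer(value.GetType());
        using (var stream = new MemoryStream())
        {
            serializer.WriteObject(stream, value);
            return stream.Length;
        }
    }

    public static bool FitsInCache(object value) => SerializedSizeInBytes(value) <= MaxObjectBytes;
}
```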

Have fun with it and use the features of the Windows Azure platform!

Tuesday, February 22, 2011

A Generic Worker beats all

Windows Azure charges you for the time your application is deployed (and maybe running). To fully utilize the resources at your disposal, you'd better use your worker roles efficiently. This blog post is the first in a series showing how to gain maximum efficiency while keeping your worker role scalable.

The magic behind this is: a generic worker that can handle different tasks.

Think of a worker role as a program that initially does absolutely nothing but listen to a queue in your storage account. This queue is the broker for your worker role and is fed by the outside world (your own apps or the apps of others you serve). Each message describes a task that the generic worker has to fulfill. The description also contains the location of an uploaded assembly somewhere in blob storage, parameters and any other information needed for that specific task.

After picking up a message from the queue, the worker role looks up the assembly in blob storage, loads it into an AppDomain and starts executing the task described in the message. The task can be a long-running calculation, or one that listens to another queue where it is fed task-specific messages. It can run just once or forever (as long as the app is deployed, of course). The worker role loads the different assemblies and executes the different tasks at configurable intervals or when a new message arrives.
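The dispatch loop described above can be sketched without any Azure dependencies. The sketch makes these assumptions:

* The queue is an in-memory `Queue<string>`.
* A message uses a made-up `assemblyLocation|taskName|key=value;...` format, because the post does not define one.
* Handlers registered by name stand in for tasks loaded from assemblies in blob storage. The assembly location is parsed but not actually downloaded.

`TaskDescription`, `GenericWorker`, `RegisterTask` and `ProcessPending` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical task description parsed from a queue message.
public sealed class TaskDescription
{
    public string AssemblyLocation { get; set; } // where the task's assembly lives, e.g. a blob URI
    public string TaskName { get; set; }
    public Dictionary<string, string> Parameters { get; set; }

    // Assumed message format: "assemblyLocation|taskName|key1=value1;key2=value2"
    public static TaskDescription Parse(string message)
    {
        string[] parts = message.Split('|');
        if (parts.Length != 3)
            throw new FormatException("Expected 'assemblyLocation|taskName|parameters'.");

        return new TaskDescription
        {
            AssemblyLocation = parts[0],
            TaskName = parts[1],
            Parameters = parts[2]
                .Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries)
                .Select(pair => pair.Split(new[] { '=' }, 2))
                .ToDictionary(kv => kv[0], kv => kv.Length > 1 ? kv[1] : string.Empty)
        };
    }
}

public sealed class GenericWorker
{
    // Task handlers by name; in the real worker these would come from assemblies loaded from blob storage.
    private readonly Dictionary<string, Func<TaskDescription, string>> _tasks =
        new Dictionary<string, Func<TaskDescription, string>>();

    public void RegisterTask(string name, Func<TaskDescription, string> handler) => _tasks[name] = handler;

    // Drains the queue once and returns one result per message; unknown tasks are reported, not thrown.
    public List<string> ProcessPending(Queue<string> queue)
    {
        var results = new List<string>();
        while (queue.Count > 0)
        {
            TaskDescription task = TaskDescription.Parse(queue.Dequeue());
            results.Add(_tasks.TryGetValue(task.TaskName, out var handler)
                ? handler(task)
                : $"unknown task '{task.TaskName}' (assembly {task.AssemblyLocation} not loaded)");
        }
        return results;
    }
}
```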

Remember, this is a rough description of a generic worker that can be utilized up to 100%. That's what you want; after all, you are paying for it. Don't worry about the CPU getting hot!

To keep this worker role scalable, new instances of the role need to preload the assemblies already loaded in the first instance. This requires some administration, but hey, that's why we have storage at our disposal. Imagine a generic worker role with tens of tasks running. One of those tasks is to provide elasticity to the role itself! When it exceeds a certain limit (CPU, maximum number of threads, a steeply growing number of messages in one or more queues), it scales itself up! Now that's what I call magic.
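The self-scaling task boils down to a decision function over a few metrics. The sketch uses made-up thresholds: scale up above 80% CPU, above 200 threads, or when the queue at least doubles; scale down below 20% CPU with an empty queue. `WorkerMetrics` and `ScalingAdvisor` are hypothetical. Actually changing the instance count would go through the Windows Azure Service Management API, which is not shown.

```csharp
using System;

// Hypothetical snapshot of the metrics the scaling task looks at.
public sealed class WorkerMetrics
{
    public double AverageCpuPercent { get; set; }
    public int ThreadCount { get; set; }
    public int QueueLength { get; set; }
    public int PreviousQueueLength { get; set; }
}

public static class ScalingAdvisor
{
    // Example thresholds only; tune them for your own workload.
    public const double ScaleUpCpuPercent = 80;
    public const double ScaleDownCpuPercent = 20;
    public const int MaxThreads = 200;
    public const double QueueGrowthFactor = 2.0;

    // Returns the suggested instance count; it never suggests fewer than two instances.
    public static int SuggestInstanceCount(WorkerMetrics m, int currentInstances)
    {
        bool queueGrowingSteeply = m.PreviousQueueLength > 0
            && m.QueueLength >= m.PreviousQueueLength * QueueGrowthFactor;

        if (m.AverageCpuPercent > ScaleUpCpuPercent || m.ThreadCount > MaxThreads || queueGrowingSteeply)
            return currentInstances + 1;

        if (m.AverageCpuPercent < ScaleDownCpuPercent && m.QueueLength == 0)
            return Math.Max(2, currentInstances - 1);

        return currentInstances;
    }
}
```

The floor of two instances matches the minimum needed to meet the Azure SLA. Scaling one step at a time keeps a single noisy measurement from causing large swings.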

The next blog post will show what the bare generic worker looks like.

Wednesday, February 9, 2011

VM role the sequel

After playing with the VM Role beta for some time and stumbling upon strange problems, I found out that the VM Role beta was activated on my CTP08 subscription and not on my regular one. In the Windows Azure portal, with the information expanded, it looks like it's active :-)

Anyway, I'm now testing the VM role on a small instance over Remote Desktop. The goal is to see whether the VM role is an appropriate replacement for the local VM images running in our own datacenter. So far, it looks good. The only thing is: we are running stateless. Information that needs to be kept should be stored the cloud way, not on local disk or other local storage. Use Azure Drive, TFS hosted somewhere, SkyDrive, Dropbox or other cloud services that let you save information reliably. If you save your work on the C: drive while running a VM role, you might lose it when the role gets recycled or crashes and is brought up somewhere else (with yet another C: drive). Although the VM role was never intended to be pure IaaS, it's still a nice alternative that can be very useful in some scenarios.

We'll continue by making some nice differencing disks with specific tools for specific users (developers, testers, desktop workers etc.) and see how that works. Developing with VS2010 on an 8-core cloudy machine with 14 GB of memory is a blessing. Store your sources on Azure Drive (or an alternative) and connect directly to your TFS environment using Azure Connect. This combines the best of all worlds and gives you a flexible, cost-effective and, above all, quick way of setting up images and tearing them down again.

Labels:

azure,

iaas,

microsoft,

visual studio,

vm role,

vs2010,

windows azure

Monday, January 24, 2011

VM Role considerations

After a lot of experimenting to get the VM role working, here are a few considerations:

- Take some time (a lot of time, actually) to prepare your image and follow all prerequisites at http://msdn.microsoft.com/en-us/library/gg465398.aspx. Two important steps: first build a base image VHD, which will be the parent of all your other differencing disks, then build the differencing disks themselves. The differencing disks contain the specific characteristics of the VM role to upload and run. Typically you won't run your base VHD (it's just W2008R2); the differencing disks are what add the value. Think of a development environment with Visual Studio and other tools for your developers and/or architects, a specific VHD for testers with the test edition of VS2010 installed, desktop environments with just Office tooling, etc.

- Don't bother trying to upload your sysprepped W2008R2 VHD from Windows 7 :-)

For some reason, even after you create the VHD with all the necessary tools on it, csupload still needs some Hyper-V magic to happen. The thing is, that Hyper-V magic isn't available on Windows 7.

- Use the Set-Connection switch of csupload to set a "global" connection, written to disk, for your command session and take it from there.

- This is where our struggle with the actual csupload started. The error message told me that the subscription doesn't have the VM Role beta enabled yet. The thing is... I did enable it!

I'll just continue the struggle and get it to work... If you have suggestions, please let me know here or on Twitter @riccardobecker.

Labels:

azure,

iaas,

microsoft,

visual studio,

vm role,

vs2010,

windows azure

Tuesday, January 4, 2011

Things to consider when migrating to Azure part 2

Here are some other issues I stumbled upon while teaching myself about, and researching, the migration of on-premises apps to Azure. As mentioned before, just getting things to run in the cloud is not that difficult. Having them run in a scalable, well-designed, cost-efficient way that fully uses the possibilities of Azure is something different. Here are some other things to consider.

- To meet the SLAs, make sure your app runs with a minimum of two instances per web, worker or VM role in each deployment (an instance count of 2, i.e. `<Instances count="2" />` in your service configuration file).

- To keep things as easy as possible and changes to a minimum, consider using the SQL Azure Migration Wizard to migrate on-premises databases to SQL Azure (http://sqlazuremw.codeplex.com/).

- Moving your intranet applications to Azure probably requires changes to your authentication code. Intranet apps commonly use AD for authentication. Web apps in the cloud can still use your AD information, but you need to set up AD federation or use a mechanism like Azure Connect to enable AD in your cloud environment.

- After migrating your SQL database to the cloud you need to change your connection string, but also realize that you need to "connect" to a database and that you cannot use

Generic TableStorage

Table Storage is an excellent, scalable and cheap way to store your tabular data. Working with tables is easy and straightforward, and with generics you don't need to write a class for every single table.

```csharp
using System.Linq;
using Microsoft.WindowsAzure;
using Microsoft.WindowsAzure.StorageClient;

// One context for every table: the table name is taken from the entity type name.
public class DynamicDataContext<T> : TableServiceContext where T : TableServiceEntity
{
    private readonly string _entitySetName;

    public DynamicDataContext(CloudStorageAccount storageAccount)
        : base(storageAccount.TableEndpoint.AbsoluteUri, storageAccount.Credentials)
    {
        _entitySetName = typeof(T).Name;
    }

    public void Add(T entityToAdd)
    {
        AddObject(_entitySetName, entityToAdd);
        SaveChanges();
    }

    public void Update(T entityToUpdate)
    {
        // The entity must be tracked by this context, e.g. loaded through Load().
        UpdateObject(entityToUpdate);
        SaveChanges();
    }

    public void Delete(T entityToDelete)
    {
        DeleteObject(entityToDelete);
        SaveChanges();
    }

    public IQueryable<T> Load()
    {
        return CreateQuery<T>(_entitySetName);
    }
}
```

That's all you need to address your tables and to add, update and delete entities. And best of all, you can unleash LINQ on your entities!

Here is how to use it, for example, with the performance counter data (WADPerformanceCountersTable) in your storage account.

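```csharp
// AsTableServiceQuery() requires: using Microsoft.WindowsAzure.StorageClient;
Microsoft.WindowsAzure.StorageCredentialsAccountAndKey sca = new Microsoft.WindowsAzure.StorageCredentialsAccountAndKey("performancecounters",
    "blablablablabla");

Microsoft.WindowsAzure.CloudStorageAccount csa = new Microsoft.WindowsAzure.CloudStorageAccount(sca, true);

// WADPerformanceCountersTable is your own TableServiceEntity class; its name must match the table name.
var PerformanceCountersContext = new DynamicDataContext<WADPerformanceCountersTable>(csa);

// Fire your LINQ. AsTableServiceQuery().Execute() follows continuation tokens,
// so results beyond a single response page are not silently cut off.
var performanceCounters = (from perfCounterEntry in PerformanceCountersContext.Load()
                           where perfCounterEntry.EventTickCount >= fromTicks &&
                                 perfCounterEntry.EventTickCount <= toTicks &&
                                 perfCounterEntry.CounterName == PerformanceCounterName
                           select perfCounterEntry).AsTableServiceQuery().Execute().ToList();
```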
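The query above expects `fromTicks` and `toTicks`, which the snippet does not define. As far as I know, `EventTickCount` holds `DateTime.Ticks` values of UTC timestamps, so a time window converts as in this sketch. The helper name `TickWindow` is hypothetical. Its in-memory `Filter` mirrors the `where` clause, so you can test the range logic without a storage account.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class TickWindow
{
    // Returns the [from, to] tick range covering 'window' up to 'untilUtc'.
    public static (long FromTicks, long ToTicks) Last(TimeSpan window, DateTime untilUtc)
    {
        if (untilUtc.Kind != DateTimeKind.Utc)
            throw new ArgumentException("Use a UTC time; EventTickCount is assumed to hold UTC ticks.", nameof(untilUtc));
        return ((untilUtc - window).Ticks, untilUtc.Ticks);
    }

    // Same predicate as the LINQ where clause, applied in memory to (ticks, counter name) pairs.
    public static List<(long Ticks, string Counter)> Filter(
        IEnumerable<(long Ticks, string Counter)> entries, long fromTicks, long toTicks, string counterName)
    {
        return entries
            .Where(e => e.Ticks >= fromTicks && e.Ticks <= toTicks && e.Counter == counterName)
            .ToList();
    }
}
```

For example, `TickWindow.Last(TimeSpan.FromHours(1), DateTime.UtcNow)` gives the bounds for the last hour of data.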

Labels:

crud,

generics,

tableservicecontext,

tablestorage
