This blog post describes a demo setup for Smart City concepts. It uses the following components:

- A demonstration game called Cities Skylines (CSL)

- A mod that can be built using Visual Studio 2015 and runs inside Cities Skylines

- Microsoft Azure Event Hub

- Stream Analytics

- PowerBI

1. Cities Skylines

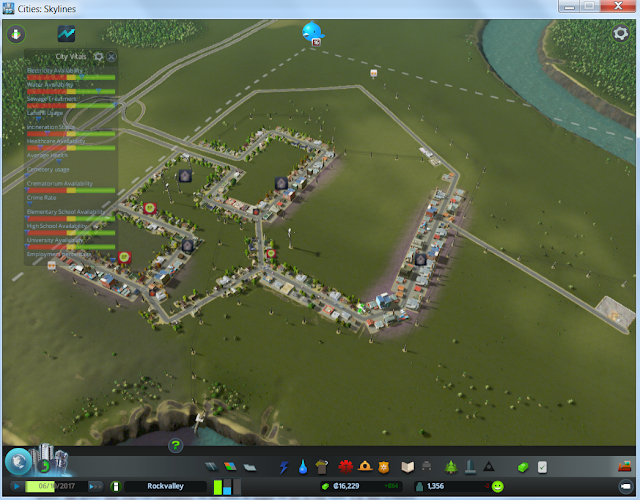

Cities Skylines is a simulation game that lets you build roads, houses, industry, commercial buildings, water towers, electricity plants and many more objects. Together they form the "city" that this demonstration is built on.

Cities Skylines lets you build so-called mods that run inside the game. In this demo, we are interested in some metadata about the city we are building and running.

2. Building a mod

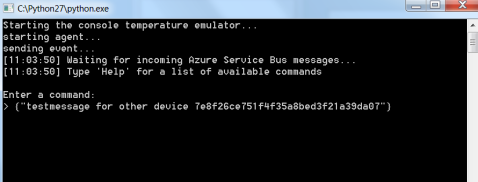

To build a mod for CSL, you need to use the .NET Framework 3.5. For this .NET version, there are no standard .NET libraries available for Service Bus / Event Hub, so I have created a wrapper around the available REST API. The most important method of this wrapper is SendTelemetry(object data).

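    public void SendTelemetry(object data)
    {
        // Shared Access Signature (SAS) token that authorizes the request; fill in your own.
        var sas = "";

        // Namespace info. The values are elided in this post; the strings below are placeholders.
        var serviceNamespace = "<service-namespace>";
        var hubName = "<event-hub-name>";
        // The third URL segment is cut off in this post; publisherName is a placeholder for it.
        var publisherName = "<publisher-name>";

        var url = new Uri(string.Format(@"https://{0}.servicebus.windows.net/{1}/publishers/{2}/messages",
            serviceNamespace, hubName, publisherName));

        using (var client = new WebClient())
        {
            client.Headers[HttpRequestHeader.Authorization] = sas;
            client.Headers[HttpRequestHeader.KeepAlive] = "true";

            // Serialize the telemetry object to JSON and send it to the Event Hub.
            var payload = SimpleJson.SimpleJson.SerializeObject(data);
            client.UploadString(url, payload);
        }
    }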
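The `sas` variable in `SendTelemetry` is left empty in this post. As a rough sketch, a Shared Access Signature token for an Event Hub can be built by signing the URL-encoded resource URI plus an expiry time with HMAC-SHA256. The class name `SasTokenSketch`, the method `CreateToken`, and every value in `Main` are placeholders for illustration and not part of the original mod; only types available in .NET 3.5 are used.

```csharp
using System;
using System.Globalization;
using System.Security.Cryptography;
using System.Text;

public static class SasTokenSketch
{
    // Builds a token of the form
    // "SharedAccessSignature sr=<uri>&sig=<signature>&se=<expiry>&skn=<policy name>".
    public static string CreateToken(string resourceUri, string keyName, string key, DateTime expiresUtc)
    {
        // The expiry is expressed as whole seconds since the Unix epoch.
        var epoch = new DateTime(1970, 1, 1, 0, 0, 0, DateTimeKind.Utc);
        var expiry = ((long)(expiresUtc - epoch).TotalSeconds).ToString(CultureInfo.InvariantCulture);

        // The string to sign is the URL-encoded resource URI, a newline, and the expiry.
        var encodedUri = Uri.EscapeDataString(resourceUri);
        var stringToSign = encodedUri + "\n" + expiry;

        string signature;
        using (var hmac = new HMACSHA256(Encoding.UTF8.GetBytes(key)))
        {
            signature = Convert.ToBase64String(hmac.ComputeHash(Encoding.UTF8.GetBytes(stringToSign)));
        }

        return string.Format(CultureInfo.InvariantCulture,
            "SharedAccessSignature sr={0}&sig={1}&se={2}&skn={3}",
            encodedUri, Uri.EscapeDataString(signature), expiry, keyName);
    }

    public static void Main()
    {
        // Placeholder namespace, hub, policy name, and key; replace with your own values.
        var token = CreateToken(
            "https://example-ns.servicebus.windows.net/example-hub",
            "send",
            "not-a-real-key",
            DateTime.UtcNow.AddHours(1));
        Console.WriteLine(token);
    }
}
```

The resulting string would be assigned to `sas` before the request is sent. Because the token expires, a long-running mod would need to regenerate it from time to time.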

The data that is sent to the Event Hub is retrieved inside the mod:

    // All figures are read from the district at index 0.
    var city = DistrictManager.instance.m_districts.m_buffer[0];
    var totalCapacity = city.GetElectricityCapacity();
    var totalConsumption = city.GetElectricityConsumption();
    var totalWaterCapacity = city.GetWaterCapacity();
    var totalWaterConsumption = city.GetWaterConsumption();
    var totalExport = city.GetExportAmount();
    var totalImport = city.GetImportAmount();
    var totalIncome = city.GetIncomeAccumulation();
    var totalSewageCapacity = city.GetSewageCapacity();
    var totalSewage = city.GetSewageAccumulation();
    var totalUnemployed = city.GetUnemployment();
    var totalWorkplace = city.GetWorkplaceCount();
    var totalWorkers = city.GetWorkerCount();
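The Stream Analytics query later in this post refers to fields such as `TotalWaterCapacity` and `TotalElectricityCapacity`, so the JSON sent to the Event Hub has to use those names. One possible way to get there is a small payload class whose property names mirror the query fields. This is a sketch under assumptions: the class name `CityTelemetry` is hypothetical, the `int` property types are a guess at what the game methods return, the sample numbers are made up, and it assumes the JSON serializer writes public properties under their own names.

```csharp
using System;

// Hypothetical payload type; property names mirror the fields used in the Stream Analytics query.
public class CityTelemetry
{
    public int TotalElectricityCapacity { get; set; }
    public int TotalElectricityConsumption { get; set; }
    public int TotalWaterCapacity { get; set; }
    public int TotalWaterConsumption { get; set; }
    public int TotalExport { get; set; }
    public int TotalImport { get; set; }
    public int TotalSewageCapacity { get; set; }
    public int TotalSewageAccumulation { get; set; }
    public int TotalUnemployed { get; set; }
    public int TotalWorkplace { get; set; }
    public int TotalWorkers { get; set; }
}

public static class CityTelemetryDemo
{
    public static void Main()
    {
        // Made-up sample values; inside the mod these would come from the game.
        var telemetry = new CityTelemetry
        {
            TotalElectricityCapacity = 1600,
            TotalElectricityConsumption = 1200,
            TotalWaterCapacity = 900,
            TotalWaterConsumption = 750
        };

        // Inside the mod, this object would be handed to SendTelemetry(telemetry).
        Console.WriteLine("Electricity headroom: {0}",
            telemetry.TotalElectricityCapacity - telemetry.TotalElectricityConsumption);
    }
}
```

Keeping the names in one class makes it easier to spot a mismatch between what the mod sends and what the query expects.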

3. Event Hub

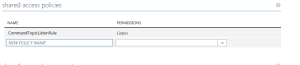

It is easy to create an Event Hub in the Azure portal (portal.azure.com) if you have an MSDN subscription or a different subscription (a free trial is also available). The Event Hub just needs to be set up.

4. Stream Analytics

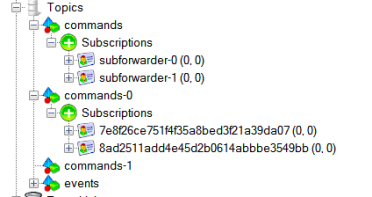

The Stream Analytics (SA) job reads the messages that the mod running in CSL sends to the Event Hub. The SA job consists of three important concepts:

- Input: where does the information come from? In this case, it is the Event Hub created in step 3.

- Query: the query that will run constantly on the data in-flight on the Event Hub. For this scenario we take the average of different fields that are part of the message sent to the Event Hub by the CSL mod.

      SELECT
          System.Timestamp as EventDate,
          AVG(TotalWaterCapacity) as TotalWaterCapacity,
          AVG(TotalWaterConsumption) as TotalWaterConsumption,
          AVG(TotalElectricityCapacity) as TotalElectricityCapacity,
          AVG(TotalElectricityConsumption) as TotalElectricityConsumption,
          AVG(TotalExport) as TotalExport,
          AVG(TotalImport) as TotalImport,
          AVG(TotalSewageCapacity) as TotalSewageCapacity,
          AVG(TotalSewageAccumulation) as TotalSewageAccumulation,
          AVG(TotalUnemployed) as TotalUnemployed,
          AVG(TotalWorkplace) as TotalWorkplace,
          AVG(TotalWorkers) as TotalWorkers,
          AVG(TotalCarsInCongestion) as TotalCarsInCongestion,
          AVG(TotalVehicles) as TotalVehicles
      INTO
          electricitybuilding
      FROM
          iotwatch
      GROUP BY TUMBLINGWINDOW(SS,10), EventDate

- Output: the output for this SA job is PowerBI. Every result produced by the SA job is pushed to the PowerBI environment that we set up in step 5.
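To get a feel for what the 10-second tumbling window in the query does, the sketch below averages readings per fixed, non-overlapping window in plain C#. It only illustrates the semantics and says nothing about how Stream Analytics works internally. The names `Reading`, `TumblingWindowSketch` and `Average` are hypothetical, the sample readings are made up, and the sketch assumes that a window includes its end time but not its start time.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical event shape: a timestamp in seconds and one measured value.
public sealed class Reading
{
    public readonly double Seconds;
    public readonly double Value;

    public Reading(double seconds, double value)
    {
        Seconds = seconds;
        Value = value;
    }
}

public static class TumblingWindowSketch
{
    // Returns one Reading per window: Seconds is the window end, Value the average.
    public static List<Reading> Average(IEnumerable<Reading> readings, double windowSeconds)
    {
        return readings
            // A reading at time t belongs to the window that ends at t rounded up
            // to the next multiple of the window size.
            .GroupBy(r => Math.Ceiling(r.Seconds / windowSeconds) * windowSeconds)
            .OrderBy(g => g.Key)
            .Select(g => new Reading(g.Key, g.Average(r => r.Value)))
            .ToList();
    }

    public static void Main()
    {
        // Made-up water consumption readings.
        var readings = new[]
        {
            new Reading(1, 100), new Reading(4, 200), new Reading(9, 300),
            new Reading(12, 50), new Reading(20, 150)
        };

        foreach (var window in Average(readings, 10))
        {
            Console.WriteLine("window ending at {0}s: average {1}", window.Seconds, window.Value);
        }
        // window ending at 10s: average 200
        // window ending at 20s: average 100
    }
}
```

Each reading lands in exactly one window, which is why the dashboard receives one averaged row per 10 seconds instead of one row per message.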

5. PowerBI

On app.powerbi.com you can create an online account for using PowerBI in this demo. Just create an account and you are good to go.

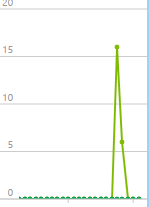

Once the game is running with the mod enabled, it starts sending data to the Event Hub. The SA job processes that data and forwards it to your PowerBI environment. When the SA job processes data successfully, a dataset called "electricity" becomes available in your PowerBI environment. With this dataset we can create reports and pin them to our dashboard in PowerBI.

6. The Result

A great game running a city and a stunning dashboard in PowerBI that shows real-time data coming from the game!

The PowerBI output: you can see that I demolished some buildings, which left some essential utilities without enough capacity. This causes shortages of electricity, water and sewage capacity, and an increasing unemployment rate!

If you need more detailed information on the specific steps, just let me know.

Enjoy!